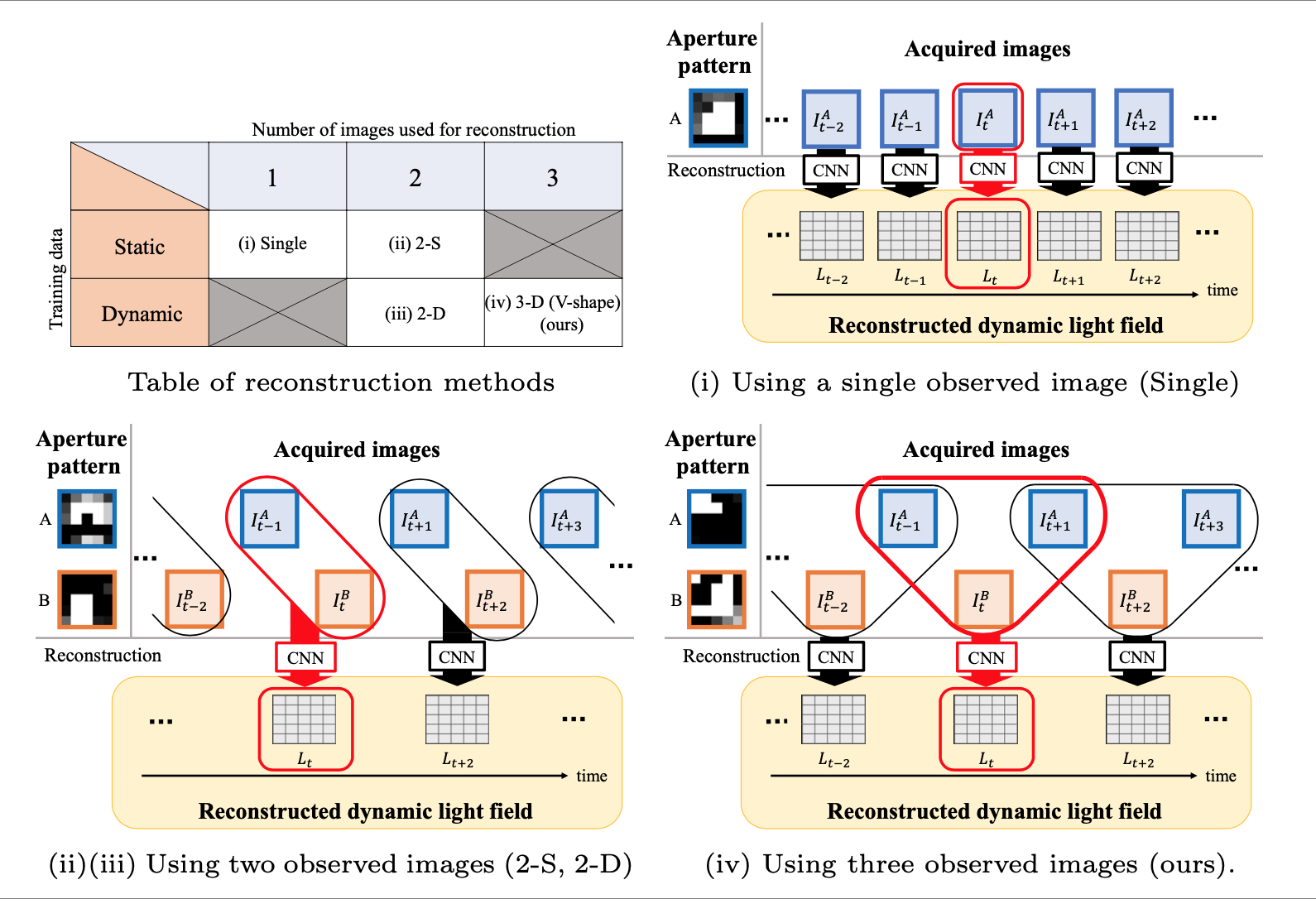

We investigate the problem of compressive acquisition of a dynamic light field. A promising solution for compressive light field acquisition is to use a coded aperture camera, with which an entire light field can be computationally reconstructed from several images captured through differently-coded aperture patterns. However, this approach assumes that the scene remains static throughout the entire acquisition process, which restricts its practical applications. In this study, we instead assume that the target scene may change over time, and propose a method for acquiring a dynamic light field (a moving scene) using a coded aperture camera and a convolutional neural network (CNN). To handle scene motion, we developed a new image observation configuration, called V-shape observation, and trained the CNN on a dynamic-light-field dataset with pseudo motions. Our method is validated through experiments using both a computer-generated scene and a real camera.
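The capture model behind this setup can be sketched as follows. In a coded aperture camera, each shot records a sum of the sub-aperture views, each weighted by the transmittance of the aperture cell it passes through; when the scene moves, each shot sees the light field at a different instant. The minimal NumPy sketch below simulates that forward model under simplifying assumptions (no noise, no exposure normalization, and a pure horizontal translation as a hypothetical pseudo motion). The function name `capture_dynamic` and all array shapes are illustrative, and the V-shape observation itself is not reproduced here.

```python
import numpy as np

def capture_dynamic(light_fields, patterns):
    """Simulate N coded-aperture shots of a scene that changes between shots.

    light_fields: (N, U, V, H, W) light field at the instant of each shot
    patterns:     (N, U, V) aperture transmittance in [0, 1] for each shot
    returns:      (N, H, W) one observed image per shot
    """
    # Shot n sums the U x V views of time instant n, each weighted by the
    # transmittance of the aperture cell (u, v) it passes through.
    return np.einsum("nuv,nuvhw->nhw", patterns, light_fields)

rng = np.random.default_rng(0)
N, U, V, H, W = 4, 5, 5, 32, 32
static_lf = rng.random((U, V, H, W))
# Hypothetical pseudo motion: translate the scene by one pixel per shot.
dynamic_lf = np.stack([np.roll(static_lf, shift=n, axis=-1) for n in range(N)])
patterns = rng.random((N, U, V))
obs = capture_dynamic(dynamic_lf, patterns)
print(obs.shape)  # (4, 32, 32)
```

Because every shot integrates a different time instant, a static-scene reconstruction would see inconsistent observations; this mismatch is what a motion-aware method has to absorb.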

Toshiaki Fujii (Professor)

Keita Takahashi (Associate Professor)

Kohei Sakai (former graduate student: -- 2021.3)

Hajime Nagahara (Professor, Osaka University)

Our software (using Python + Chainer) with sample data is now available. Please see the "readme.txt" file for the terms of use and usage instructions. [Get our software] [Get additional data] [Planets scene POV file]

2021/4/14 new! A PyTorch version (ported from our earlier Chainer version) is now available. [Get our software]

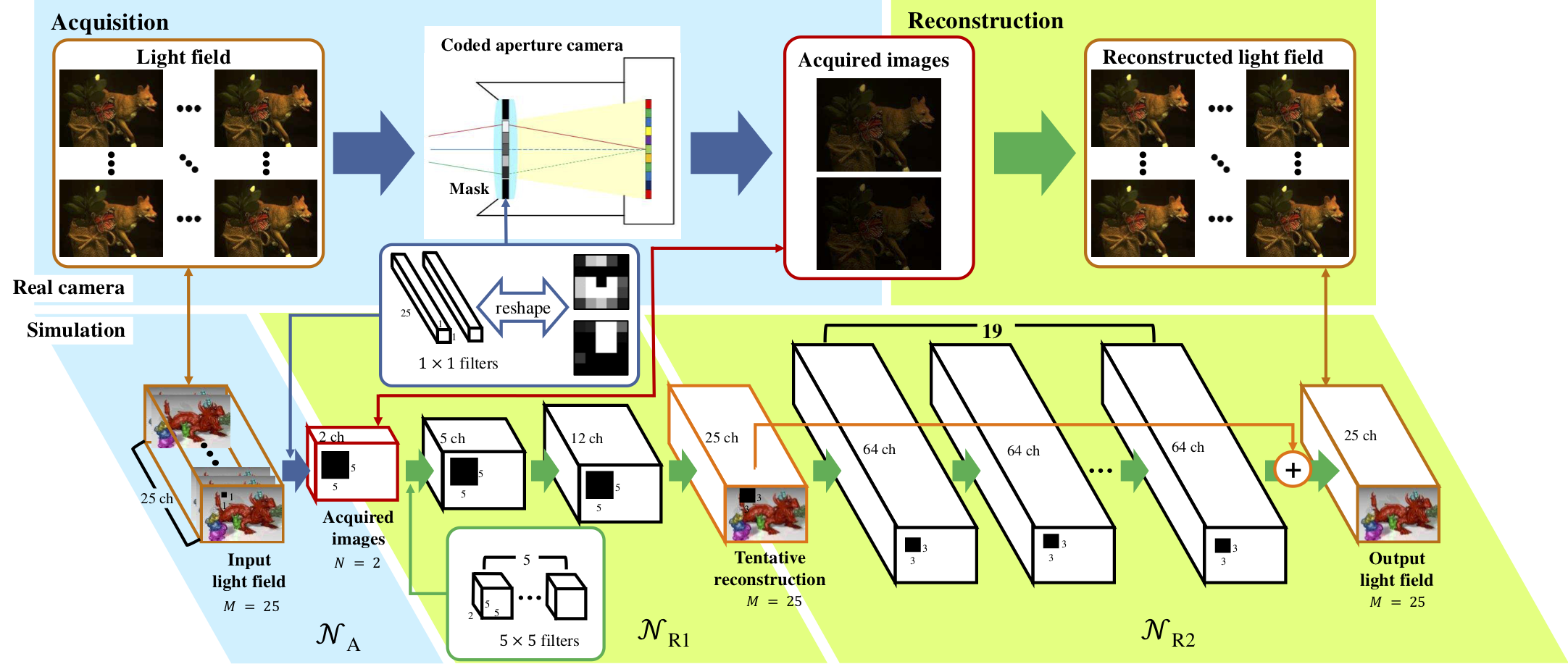

Our CNN models the entire pipeline from capture to reconstruction of a light field.

We propose a learning-based framework for acquiring a light field through a coded aperture camera. Acquiring a light field is challenging because of the sheer amount of data involved. To make the acquisition process efficient, coded aperture cameras have been successfully adopted; with these cameras, a light field is computationally reconstructed from several images acquired with different aperture patterns. However, it is still difficult to reconstruct a high-quality light field from only a few acquired images. To tackle this limitation, we formulated the entire pipeline of light field acquisition from the perspective of an auto-encoder. This auto-encoder was implemented as a stack of fully convolutional layers and trained end-to-end on a collection of training samples. We experimentally show that our method can learn good image-acquisition and reconstruction strategies. With our method, light fields consisting of 5 x 5 or 8 x 8 images can be reconstructed from only a few acquired images. Moreover, our method outperformed several state-of-the-art methods. We also applied our method to a real prototype camera to show that it is capable of capturing a real 3-D scene.
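To illustrate the auto-encoder view, the sketch below treats the aperture patterns as encoder weights (passed through a sigmoid so every transmittance stays in (0, 1)) and learns them jointly with a decoder by minimizing reconstruction error. It is a deliberately reduced, hypothetical stand-in: the decoder is a single linear map rather than a stack of fully convolutional layers, each training sample is just the vector of view intensities seen by one pixel, and the function name `train_coded_autoencoder`, the Adam optimizer, the learning rate, and the exposure normalization (dividing by the number of views) are all assumptions of this sketch.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_coded_autoencoder(X, k, steps=2000, lr=0.01, seed=0):
    """Jointly learn k aperture patterns (encoder) and a linear decoder.

    X: (n_views, m) training samples; column j holds the n_views intensities
       that one pixel position records across all viewpoints.
    Returns A (k, n_views) with entries in [0, 1], D (n_views, k), losses.
    """
    rng = np.random.default_rng(seed)
    n, m = X.shape
    params = {"theta": rng.normal(size=(k, n)),
              "D": rng.normal(scale=0.1, size=(n, k))}
    mom = {key: np.zeros_like(p) for key, p in params.items()}
    vel = {key: np.zeros_like(p) for key, p in params.items()}
    b1, b2, eps = 0.9, 0.999, 1e-8
    losses = []
    for t in range(1, steps + 1):
        A = sigmoid(params["theta"])        # physically valid transmittance
        Y = A @ X / n                       # k coded observations per pixel
        R = params["D"] @ Y - X             # reconstruction residual
        losses.append(float(np.mean(np.sum(R ** 2, axis=0))))
        # Gradients of the mean squared reconstruction error.
        grads = {"D": 2.0 / m * R @ Y.T}
        gA = 2.0 / (m * n) * params["D"].T @ R @ X.T
        grads["theta"] = gA * A * (1.0 - A)  # chain rule through the sigmoid
        # Adam update: bounded step sizes regardless of gradient scale.
        for key in params:
            mom[key] = b1 * mom[key] + (1 - b1) * grads[key]
            vel[key] = b2 * vel[key] + (1 - b2) * grads[key] ** 2
            m_hat = mom[key] / (1 - b1 ** t)
            v_hat = vel[key] / (1 - b2 ** t)
            params[key] -= lr * m_hat / (np.sqrt(v_hat) + eps)
    return sigmoid(params["theta"]), params["D"], losses

rng = np.random.default_rng(1)
# Hypothetical training data: 5 x 5 = 25 views with rank-3 structure.
X = rng.random((25, 3)) @ rng.random((3, 2000))
A, D, losses = train_coded_autoencoder(X, k=3)
print(A.shape, D.shape)  # (3, 25) (25, 3)
```

On real light fields the decoder would be a deep network operating on whole images, so this example only shows the structure of joint encoder/decoder training, not the reported reconstruction quality.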

Toshiaki Fujii (Professor)

Keita Takahashi (Associate Professor)

Yasutaka Inagaki (former graduate student: -- 2020.3)

Yuto Kobayashi (former graduate student: -- 2018.3)

Hajime Nagahara (Professor, Osaka University)

Our software (using Python + Chainer) with sample data is now available. Please see the "readme.txt" file for the terms of use and usage instructions. [Get our software] [Trained models (updated 2020/4/30)]

Raw experimental data captured with our prototype camera: [Get raw data]

A light field, which is often understood as a set of dense multi-view images, has been utilized in various 2D/3D applications. Efficient light field acquisition using a coded aperture camera is the target problem considered in this paper. Specifically, the entire light field, which consists of many images, should be reconstructed from only a few images that are captured through different aperture patterns. In previous work, this problem has often been discussed in the context of compressed sensing (CS), where sparse representations over a pre-trained dictionary or basis are exploited to reconstruct the light field. In contrast, we formulated this problem from the perspective of principal component analysis (PCA) and non-negative matrix factorization (NMF), where only a small number of basis vectors are selected in advance based on an analysis of the training dataset. From this formulation, we derived optimal non-negative aperture patterns and a straightforward reconstruction algorithm. Even though our method is based on conventional techniques, it proved to be more accurate and much faster than a state-of-the-art CS-based method.
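The PCA side of this formulation can be sketched in a few lines: compute the principal components of per-pixel view vectors from training data, derive k non-negative aperture patterns, and recover all views from k coded shots by solving a small linear system. This is a hypothetical illustration, not the derivation of the optimal patterns: here non-negativity is obtained by simply shifting and scaling each principal component into [0, 1], and reconstruction assumes each sample lies in the training mean plus the span of the top-k components. The function names `pca_apertures` and `reconstruct` are illustrative, and NMF (which yields non-negative basis vectors directly) is not shown.

```python
import numpy as np

def pca_apertures(X_train, k):
    """X_train: (n_views, m) training samples, one column per pixel position.
    Returns mean (n_views,), basis B (n_views, k), patterns A (k, n_views)."""
    mean = X_train.mean(axis=1)
    # Principal components = left singular vectors of the centred data.
    U, _, _ = np.linalg.svd(X_train - mean[:, None], full_matrices=False)
    B = U[:, :k]
    # Hypothetical non-negativity step: shift and scale each component to [0, 1].
    A = (B - B.min(axis=0)) / (B.max(axis=0) - B.min(axis=0))
    return mean, B, A.T

def reconstruct(Y, mean, B, A):
    """Y: (k, m) coded observations. Assumes every sample lies in
    mean + span(B) and solves (A B) c = Y - A mean for the coefficients c."""
    C = np.linalg.solve(A @ B, Y - (A @ mean)[:, None])
    return mean[:, None] + B @ C

rng = np.random.default_rng(0)
n_views, k = 25, 4
mu = rng.random(n_views) + 1.0
basis = rng.normal(size=(n_views, k))
X_train = mu[:, None] + basis @ rng.normal(size=(k, 500))
X_test = mu[:, None] + basis @ rng.normal(size=(k, 10))
mean, B, A = pca_apertures(X_train, k)
Y = A @ X_test  # k coded shots instead of 25 views
X_hat = reconstruct(Y, mean, B, A)
print(np.allclose(X_hat, X_test, atol=1e-6))  # True
```

Because the synthetic data here are exactly rank k around their mean, reconstruction is exact up to rounding; on real light fields the error depends on how much energy lies outside the selected components.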

Toshiaki Fujii (Professor)

Keita Takahashi (Associate Professor)

Yusuke Yagi (former graduate student: -- 2018.3)

Hajime Nagahara (Professor, Osaka University)

Toshiki Sonoda (graduate student, Kyushu University)

Aperture patterns derived by PCA and NMF are available [Download].